22 stycznia 2012

Prosty CURSOR

17 listopada 2011

SOA

Including SOA Features in Jdeveloper 11g R1 (11.1.1.1.0)

By rodrigo.jorquera on Jul 09, 2009

The new Jdeveloper’s release doesn’t include the development suite for SOA applications.

There’s an extension for that and can be downloaded from OTN.

The link to download the file (called Oracle SOA Composite Editor):

http://www.oracle.com/technology/products/jdev/101/update/fmw_products.xml

To download the file directly:

http://download.oracle.com/otn/java/jdeveloper/111/extensions/soa-jdev-extension.zip

After download the file, you can install it using the Check for update option in Jdeveloper.

As you can see in the following screenshot, the option is available under the Help menu.

Then select the option “Install From Local File”, and select the location where you put the zip file.

After that you will see that the Oracle SOA Composite Editor will be installed and Jdeveloper will restart.

To verify the installed extension, You can go to File -> New section and you will see the new option “SOA Tier”.

If you chose that you will have the following templates as an option:

Then, you are ready to work in your SOA project!.

źródło:

http://blogs.oracle.com/rodrigo/entry/including_soa_features_in_jdev

18 października 2011

1 września 2011

Oracle Database – auditing.

AUDIT SELECT, INSERT, UPDATE, DELETE ON HR.P_LISTA;

COMMIT;

AUDIT SELECT, INSERT, UPDATE, DELETE ON HR.P_LISTA BY ACCESS;

NOAUDIT SELECT, INSERT, UPDATE, DELETE ON HR.P_LISTA;

AUDIT INSERT, UPDATE, DELETE ON HR.P_LISTA BY ACCESS;

The user TSUTTON had auditing set up BY SESSION and we see that the ACTION_NAME appears as ‘SESSION REC’ in each case, which shows that auditing is being done at the session level. The user BWILLIAMS had auditing set at the access level, and here we see a listing of every insert and delete, along with the timestamp of the action, even though several inserts were performed on the same table in the same session.

Źródła:

http://www.dbspecialists.com/december-2003.html

http://download.oracle.com/docs/cd/B10500_01/server.920/a96521/audit.htm

18 maja 2011

ORA-04030

Błąd pojawił się przy generowaniu raportu.

This ORA-04030 can be caused by a shortage of RAM on a dedicated (non shared server) environment, a too small PGA, and by not setting kernel parameters large enough to allow enough RAM. The ORA-04030 is also common when running an import. See import ORA-04030 error tips.

Also see MOSC Note 233869.1 titled “Diagnosing and Resolving ORA-4030 errors”.

Essentially the ORA-04030 is clear, and “out of process memory” error, whereby Oracle cannot get the RAM needed to complete the operations. Depending on your release of Oracle, you have several options:

- Increase pga_aggregate_target

- Decrease sort_area_size and/or hash_area_size

- Move to multi-threaded server (a.k.a. MTS or shared servers)

Aktualna wartość pga_aggregate_target to 1073741824 (1 GB), sort_area_size: 1048576 (1 MB)

sql>create pfile from spfile

plik powstaje w c:\oracle\ora92\database\INITpersonel.ora

zmieniam pga_aggregate_target na 1610612736 (1,5 GB)

sql>shutdown immediate

sql>create spfile from pfile

sql>startup

PGA_AGGREGATE_TARGET specifies the target aggregate PGA memory available to all server processes attached to the instance.

Setting PGA_AGGREGATE_TARGET to a nonzero value has the effect of automatically setting the WORKAREA_SIZE_POLICY parameter to AUTO. This means that SQL working areas used by memory-intensive SQL operators (such as sort, group-by, hash-join, bitmap merge, and bitmap create) will be automatically sized. A nonzero value for this parameter is the default since, unless you specify otherwise, Oracle sets it to 20% of the SGA or 10 MB, whichever is greater.

22 kwietnia 2011

(K1)

Oracle OLTP features.

Nonescalating row-level locking

Multiversion read consistency - Oracle provides statement-level and transaction-level data consistency withoutrequiring read locks. Oracle uses rollback segments toreproduce data as it existed at the time the query started.

Shared SQL - The parsing of a SQL statement is fairly CPU-intensive. Oracle caches parsedand optimized SQL statements in the shared SQL area within the shared pool.

Bind Variables and Shared SQL

Oracle’s shared SQL is a key feature for building high-performanceapplications. In an OLTP application, similar SQL statements may be used repeatedly,but each SQL statement submitted will have different selection criteria contained in theWHERE clause to identify the different sets of rows on which to operate. Oracle canshare SQL statements, but the statements must be absolutely identical.To take advantage of this feature for statements that are identical except for specificvalues in a WHERE clause, you can use bind variables in your SQL statements. Thevalues substituted for the bind variables in the SQL statement may be different, but thestatement itself is the same.

Youcan write the application to use bind variables

UPDATE emp SET salary = salary * (1 + :v_incr)

WHERE empno = :v_empno;

Stored outlines - The route a SQL statement takes during executionis critical for high performance. Once application developers and DBAshave tuned a SQL statement for maximum efficiency, they can force the Oracleoptimizer to use the same execution plan regardless of environmental changes.

Multi-Threaded Server/shared server – allow Oracle to support larger user populations.

Database Resource Manager - The DRM allocates CPU and parallelism resources to consumer groups based on resource plans. For example, you can allocate 80 percent of the CPU resources to order-entry users, with the remaining 20 percent allocated to users asking for reports. If the order-entry users aren’t using all the allocated resources, the reporting users can use more than their allotted percentage. With the DRM, you can dynamically alter the details of the plan without shutting down the instance. Oracle9i also added to the Database Resource Manager the ability to proactively estimate the amount of CPU that an operation will require. If an operation looks as if it will exceed the maximum CPU time specified for a resource group, the operation will not be executed, which can prevent inappropriately large operations from even starting.

The DRM now allows a DBA to specify the number of active sessions availableto a consumer group.

Real Application Clusters (235)

20 kwietnia 2011

(K1)

The I/O performed by most OLTP systems tends to beapproximately 70–80 percent read and 20–30 percent write.

Business intelligence data warehousing systems and OLTP systems could accessmuch of the same data, but these types of systems also typically have differentrequirements in terms of CPU, memory, and data layout, which makes supporting amixed workload less than optimal for both types of processing.

Real ApplicationClusters, with dynamic service provisioning since Oracle Database 10g, makes it possibleto allocate individual nodes for individual workloads.

Oracle7 introduced stored procedures written in PL/SQL, Oracle’s proprietary languagefor writing application logic. These procedures are stored in the database andexecuted by clients issuing remote procedure calls (RPCs) as opposed to executingSQL statements. Instead of issuing multiple SQL calls, occasionally with intermediatelogic to accomplish a task, the client issues one procedure call, passing in therequired parameters. The database executes all the required SQL and logic using theparameters it receives.

If the database server failswhile executing some transaction, the application server can resubmit the transactionto a surviving database server, as control of the transaction lies with theapplication server.

dbverify

dbverify

dbv file=USER01.DBF blocksize=8192

select status from v$datafile;

select status from dba_tablespaces;

recover datafile 9;

przeniesienie pliku

shutdown immediate;

cp plik do nowej lokalizacji

startup mount

alter database rename file ‘sfasfaf’ to ‘fasdfas ‘

alter database open

alter tablespace USERS add datafile ‘f:fsdfs\dsafas.dbf’ size=1024M autoextend=0n;

5 kwietnia 2011

4 kwietnia 2011

Dafault value (alter table – oracle)

alter table PROBY modify (“WYDANO” FLOAT(126) DEFAULT 0)

1 kwietnia 2011

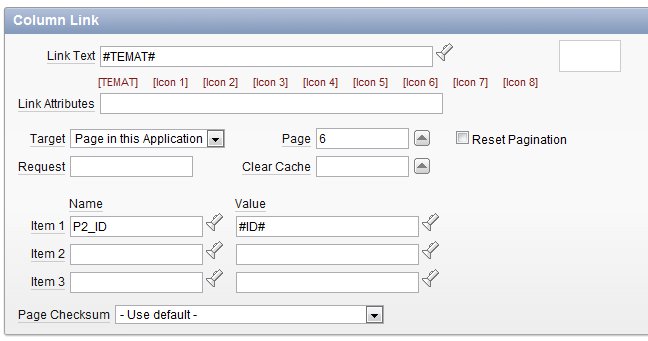

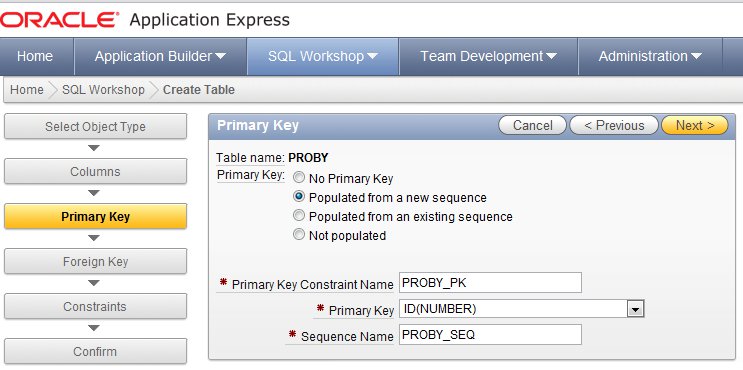

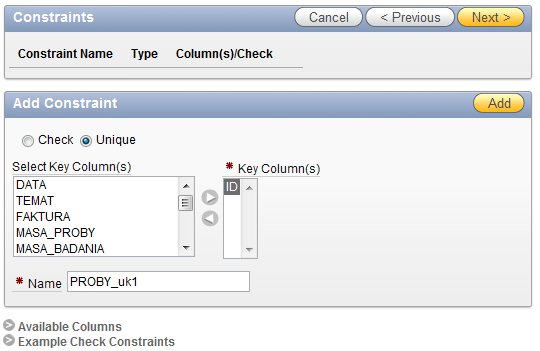

ApEx – primary key

CREATE table “PROBY” (

“ID” NUMBER(7) NOT NULL,

“DATA” DATE,

“TEMAT” VARCHAR2(250),

“FAKTURA” VARCHAR2(200),

“MASA_PROBY” NUMBER(7),

“MASA_BADANIA” NUMBER(7),

“MASA_PO_BADANIACH” NUMBER(7),

“W_ZAMRAZARCE” NUMBER(7),

“DLA_PRACOWNIKOW” NUMBER(7),

“WARTOSC” NUMBER(7),

“WARTOSC_PO_BADANIACH” NUMBER(7),

“ODPADY” NUMBER(7),

“ODPOWIEDZIALNY” VARCHAR2(150),

constraint ”PROBY_PK” primary key (“ID”)

)

/

CREATE sequence “PROBY_SEQ”

/

CREATE trigger “BI_PROBY”

before insert on “PROBY”

for each row

begin

if :NEW.”ID” is null then

select “PROBY_SEQ”.nextval into :NEW.”ID” from dual;

end if;

end;

/

alter table “PROBY” add

constraint “PROBY_UK1″

unique (“ID”)

/

Aby zmienić home page:

Go to shared component.

Click on Edit Security Attribute under Secuirty

In Home Link text box… you will find something smilar to f?p=&APP_ID.:1:&SESSION.

After &APP_ID. you see a number , that number denotes home page.. in the above example 1 denotes the home page change 1 to 4 if you want page 4 to be ur home page

Oracle on VMware

Źródła

http://www.vmware.com/solutions/partners/alliances/oracle-database.html

http://www.vmware.com/solutions/partners/alliances/oracle-vmware-support.html

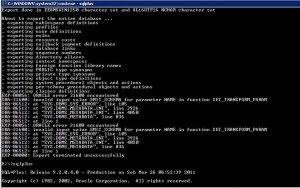

EXP-00008: Oracle error 942 encountered

EXP-00008: Oracle error 942 encountered

ORA-00942: table or view does not exist

EXP-00024: Export views not installed, please notify your DBA

EXP-00000: Export terminated unsuccessfully

Run the $oracle_home/rdbms/admin/catexp.sql script as sysdba

EXP-00056: Oracle error 31600 encountered

ORA-31600: invalid input value EMIT_SCHEMA for parameter NAME in function SET_TRANSFORM_PARAM

ORA-06512: at “SYS.DBMS_SYS_ERROR”, line 105

It appears that the Postinstall Actions were not completed after the 9.2.0.4 patch set was applied.

So the solution is:

1) Cleanly shutdown the database.

2) Connect to the database using the SYS/password as SYSDBA account.

3) Startup the database using command:

STARTUP MIGRATE <<< This starts up the database in restricted mode.

4) Run the catpatch.sql script from the $ORACLE_HOME\rdbms\admin directory.

5) Cleanly shutdown the database.

6) Startup the database with the command:

STARTUP

7) Perform another export of the database.

Źródła:

http://www.dbasupport.com/forums/archive/index.php/t-42811.html

12 marca 2011

Oracle, mulituser concurrency – part 2 (K1)

Update tabeli

1. The client modifies the employee name on the screen. The client process sends a

SQL UPDATE statement over the network to the server process.

2. The server process obtains a System Change Number and reads the data block

containing the target row.

3. The server records row lock information in the data block.

4. The server writes the old image of the data to the redo buffers in memory, and

then writes the changes to a rollback segment and modifies the employee data,

which includes writing the SCN to the ORA_ROWSCN pseudocolumn in Oracle

Database 10g or newer database releases.

5. The server process writes the redo buffers to disk, and then writes the rollback

segments and the changed data to disk. The rollback segment changes are part of

the redo, since the redo log stores all changes coming from the transaction.

6. The HR clerk commits the transaction.

7. Log Writer (LGWR) writes the redo information for the entire transaction,

including the SCN that marks the time the transaction was committed, from the

redo log buffer to the current redo log file on disk. When the operating system

confirms that the write to the redo log file has successfully completed, the transaction

is considered committed.

8. The server process sends a message to the client confirming the commit.

A confilicting write operation

1. Client A modifies the employee name on the screen. Client A sends a SQL

UPDATE statement over the network to the server process.

2. The server process obtains an SCN for the statement and reads the data block

containing the target row.

3. The server records row lock information in the data block.

4. The server process writes the changes to the redo log buffer.

5. The server process copies the old image of the employee data about to be

changed to a rollback segment. Once the server process has completed this

work, the process modifies the employee data, which includes writing the SCN

to the ORA_ROWSCN pseudocolumn in Oracle Database 10g or newer database

releases.

6. Client B modifies the employee name on the screen and sends a SQL UPDATE

statement to the server.

7. The server process obtains an SCN and reads the data block containing the target

row.

8. The server process sees that there is a lock on the target row from the information

in the header of the data block, so it takes one of two actions. If the

isolation level on Client B’s transaction is READ COMMITTED, the server

process waits for the blocking transaction to complete. If the isolation level for

Client B’s transaction is SERIALIZABLE, an error is returned to the client.

9. Client A commits the transaction, the server process takes the appropriate

action, and the server sends a message to Client A confirming the commit.

10. If Client B executed the SQL statement with the READ COMMITTED isolation

level, the SQL statement then proceeds through its normal operation.

Read and write operation.

1. Client A sends a SQL SELECT statement over the network to the server process.

2. The server process obtains an SCN for the statement and begins to read the

requested data for the query. For each data block that it reads, it compares the

SCN of the SELECT statement with the SCNs for any transactions for the relevant

rows of the data block. If the server finds a transaction with a later SCN

than the current SELECT statement, the server process uses data in the rollback

segments to create a “consistent read” version of the data block, current as of the

time the SELECT was issued. This is what provides the multiversion read consistency

(MVRC) and avoids the need for Oracle to use read locks on data. If a row

has been updated since the transaction started, Oracle simply gets the earlier version

of the data for a consistent view.

3. Client B sends a SQL UPDATE statement for a row in the EMP table that has

not yet been read by Client A’s SELECT statement. The server process gets an

SCN for the statement and begins the operation.

4. Client B commits his changes. The server process completes the operation,

which includes recording information in the data block that contained the modified

row that allows Oracle to determine the SCN for the update transaction.

5. The server process for Client A’s read operation comes to the newly modified

block. It sees that the data block contains changes made by a transaction that

has an SCN that is later than the SCN of the SELECT statement. The server process

looks in the data block header, which has a pointer to the rollback segment

that contains the data as it existed when Client A’s transaction started. The rollback

segment uses the old version of the data to create a version of the block as

it existed when the SELECT statement started. Client A’s SELECT statement

reads the desired rows from this consistent version of the data block.

Oracle is one of the only databases on the market that doesn’t use read locks, Oracle will essentially always deliver the lowest amount of database contention.

A workspace is a way to isolate data from changes in the general database environment. Workspace Manager accomplishes this by creating workspace-specific versions of data. When youcreate a workspace, you essentially create a snapshot of the data in the workspace at a specific point in time. Further changes to that data from outside the workspace do not affect the view of the data in the workspace, and changes made to data within the workspace are not seen by users outside the workspace. Changes to data within a workspace are visible only to other workspace. Workspaces allow youto essentially create separate data environments for specialized usage.

The key to workspaces is the support of multiple versions of the same data. To use workspaces to version data in a table, youmu st first enable the table for versioning. Workspace Manager can version-enable one or more user tables in the database. users. The unit of versioning is a row. Versioned rows are stored in the same table as the original rows. Oracle implements workspaces by adding metadata to the rows of a table. The timestamp allows users in a workspace to go back to any point in time and view the database from the perspective of changes made in that workspace up to another point in time.

Workspace operations:

Rollback

Youcan roll back changes to a workspace to return the workspace to the point

in time when the workspace was created. Youcan also designate savepoints,

which allow you to roll back the changes in a workspace to a subsequent point

in time.

Refresh

Refreshing a workspace means bringing the data in a workspace into agreement

with the same data in the overall database.

Merge

A merge operation rolls changes made in a workspace into its parent workspace.

Transaction Processing

ACID properties of a transaction:

Atomic

The entire transaction succeeds or fails as a complete unit.

Consistent

A completed transaction leaves the affected data in a consistent or correct state.

Isolated

Each transaction executes in isolation and doesn’t affect the states of others.

Durable

The changes resulting from committed transactions are persistent.

OLTP

High transaction volumes and large user populations. Online transaction processing implies direct and conversational interaction between the transaction processing system and its users. (223)

11 marca 2011

Oracle performance, part 3. Multiuser Concurrency (K1)

DRM (Database Resource Manager) works by leveraging consumer groups you’ve identified and enabling you to place limits on the amount of computer resources that can be used by that group. (Predicting resource utilization, Switching consumer groups, Limiting number of connections)

Multiuser Concurrency

Database systems must protect data integrity.

The transaction is the bedrock of data integrity in multiuser databases and the foundation of all concurrency schemes. A transaction is defined as a single indivisible piece of work that affects some data. Once a transaction is committed, the changes made by that transaction become permanent and are made visible to other transactions and other users.

Transactions always occur over time, although most transactions occur over a very short period of time. Since the changes made by a transaction aren’t official until the transaction is committed, each individual transaction must be isolated from the effects of other transactions. The mechanism used to enforce transaction isolation is the lock. A lock prevents users from modifying data.

Aby zapobiec możliwości nadpisania zmian jednej transakcji przez drugą równoczesną ale np. dłużej trwającą transakcję Oracle używa dwóch rodzajów blokad (locks):

An exclusive lock (inaczej write lock) is applied and held while changes are made to data in the course of a transaction and released when the transaction is ended by either a COMMIT or a ROLLBACK statement. A write lock can be held by only one user at a time, so only one user at a time can change that data.

A read lock (shared lock) can be held by any number of users who are merely reading the data, since the same piece of data can be shared among many readers. However, a read lock prevents a write lock from being placed on the data, as the write lock is an exclusive lock. Oracle uses read locks only when a SQL operation specifically requests them with the FOR UPDATE clause in a SELECT statement.

The interference caused by conflicting locks is called contention.

Serialization – serializable transactions appear as though they have been executed in a series of distinct, ordered transactions. When one transaction begins, it is isolated from any changes that occur to its data from subsequent transactions. To the user, a serializable transaction looks as though it has the exclusive use of the database for the duration of the transaction. Serializable transactions are predictable and reproducible, the two cardinal virtues of data integrity.

Multiversion Read Consistency MVRC – Multiversion read consistency guarantees that a user sees a consistent view of the data she requests. If another user changes the underlying data during the query execution, Oracle maintains a version of the data as it existed at the time the query began. If there were transactions underway but uncommitted at the time the query began, Oracle will ensure that the query ignores the changes made by those transactions. The data returned to the query will reflect all committed transactions at the time the query started.

This feature has two dramatic effects on the way queries impact the database. First, Oracle doesn’t place any locks on data for read operations. This means that a read operation will never block a write operation. A user gets a complete “snapshot” view of the data, accurate at the point in time that the query began.

Isolation levels - describe how a transaction will interact with other transactions and how it will be isolated from other transactions.

An application programmer can set an isolation level at the session level (ALTER SESSION) or transaction level (SET TRANSACTION).

READ COMMITTED – Enforces serialization at the statement level. This means that every statement will get a consistent view of the data as it existed at the start of that statement. However, since a transaction can contain more than one statement, it’s possible that nonrepeatable reads and phantom reads can occur within the context of the complete transaction. The READ COMMITTED isolation level is the default isolation level for Oracle.

SERIALIZABLE – Enforces serialization at the transaction level. This means that every statement within a transaction will get the same consistent view of the data as it existed at the start of the transaction.

READ ONLY – this level explicitly prohibits any write operations and provides an accurate view of all the data at the time the transaction began.

Because of their differing spans of control, these two isolation levels also react differently when they encounter a transaction that blocks their operation with an exclusive lock on a requested row. Once the lock has been released by the blocking transaction, an operation executing with the READ COMMITTED isolation level will simply retry the operation. Since this operation is concerned only with the state of data when the statement begins, this is a perfectly logical approach.

On the other hand, if the blocking transaction commits changes to the data, an operation executing with a SERIALIZABLE isolation level will return an error indicating that it cannot serialize operations. This error makes sense, because the blocking transaction will have changed the state of the data from the beginning of the SERIALIZABLE transaction, making it impossible to perform any more write operations on the changed rows. In this situation, an application programmer will have to add logic to his program to return to the start of the SERIALIZABLE transaction and begin it again.

Lost updates

The most common type of integrity problem occurs when two writers are both

changing the same piece of data, and one writer’s changes overwrite the other

writer’s changes. This is the problem that exclusive locks are designed to prevent.

Dirty reads

Occur when a database allows a transaction to read data that has been changed

by another transaction but hasn’t been committed yet. The changes made by the

transaction may be rolled back, so the data read may turn out to be incorrect.

Many databases allow dirty reads to avoid the contention caused by read locks.

Nonrepeatable reads

Occur as a result of changes made by another transaction. One transaction

makes a query based on a particular condition. After the data has been returned

to the first transaction, but before the first transaction is complete, another

transaction changes the data so that some of the previously retrieved data no

longer satisfies the selection condition. If the query were repeated in the same

transaction, it would return a different set of results, so any changes made on the

basis of the original results may no longer be valid. Data that was read once can

return different results if the data is read again later in the same transaction.

Phantom reads

Also occur as a result of changes made by another transaction. One transaction

makes a query based on a particular condition. After the data has been returned

to the first transaction, but before the first transaction is complete, another

transaction inserts into the database new rows that meet the selection criteria for

the first transaction. If the first SQL statement in a transaction returned the

number of rows that initially satisfied the selection criteria, and then performed

an action on the rows that satisfied the selection criteria later in the transaction,

the number of rows affected would be different from the initial number of rows

indicated, based on the inclusion of new phantom rows.

Oracle Concurrency Features - three features are used by Oracle to implement multiversion read consistency.

Rollback segments – are structures in the Oracle database that store “undo” information for transactions in case of rollback. When a transaction starts changing some data in a block, it first writes the old image of the data to a rollback segment. A rollback segment is different from a redo log. The redo log is used to log all transactions to the database and recover the database in the event of a system failure, while the rollback segment provides rollback for transactions and read consistency. Blocks of rollback segments are cached in the System Global Area just like blocks of tables and indexes.

To preserve the integrity of the data in the database and enforce any type of serialization, it is critical to keep track of the order in which actions were performed. Oracle uses the System Change Number as an absolute determinant of the order of transactions. Oracle uses the SCN information in the redo log to reproduce transactions in the original and correct order when applying redo. Oracle also uses the SCN to determine when to clean up information in rollback segments that are no longer needed.

Since Oracle Database 10g, there is a pseudocolumn on each row that

contains the SCN, ORA_ROWSCN. You can use this to quickly determine

if a row has been updated since it was retrieved by comparing

the value read from this pseudocolumn at the start of a transaction

with the value read from this pseudocolumn at the end of the

transaction.

Locks in data blocks – A database must have a way of determining if a particular row is locked. Most

databases keep a list of locks in memory, which are managed by a lock manager

process. Oracle keeps locks with an area of the actual block in which the row is

stored. A data block is the smallest amount of data that can be read from disk for

an Oracle database, so whenever the row is requested, the block is read, and the

lock is available within the block.

Nonescalating row locks – other databases will sometimes escalate locks to a higher level of granularity within the database (na całą tabelę). With Oracle, the

lock indicator is stored within the data block itself, so there is no increase in overhead for a lock manager when the number of locks increases. Consequently, there is never any need for Oracle to escalate a lock.

A lock manager called the Distributed Lock Manager (DLM) has historically been used with Oracle Parallel Server to track locks across multiple instances of Oracle. This is a completely different and separate locking scheme that doesn’t affect the way Oracle handles row locks. The DLM technology used in Oracle Parallel Server was improved and integrated into a core product in Oracle9i, Real Application Clusters. (213)

Also occur as a result of changes made by another transaction. One transaction

makes a query based on a particular condition. After the data has been returned

to the first transaction, but before the first transaction is complete, another

transaction inserts into the database new rows that meet the selection criteria for

the first transaction. If the first SQL statement in a transaction returned the

number of rows that initially satisfied the selection criteria, and then performed

an action on the rows that satisfied the selection criteria later in the transaction,

the number of rows affected would be different from the initial number of rows

indicated, based on the inclusion of new phantom rows.

10 marca 2011

Oracle perforamance – part 2 (K1)

Partition based parallelism.

Atable with 12 partitions (for example, one partition for each month of the year) canhave a maximum number of 12 PEs for an update or delete. An update to only onemonth of data would have no parallelism because it involves only one partition.

Oracle can execute an INSERT statement of the form INSERT INTO tableXSELECT…FROM tableY in parallel for nonpartitioned and partitioned tables.

Memory resources.

An Oracle instance uses the database server’s memory resources to cache theinformation accessed to improve performance. Oracle utilizes an area of shared memory called the System Global Area (SGA) and a private memory area for each server process called the Program Global Area (PGA).

Oracle Database 11g adds automatic memory management of the SGA and PGA.

Oracle uses the SGA for the following operations:

Caching of database blocks containing table and index data in the database buffer cache

Caching of parsed and optimized SQL statements, stored procedures, and data dictionary information in the shared pool

Buffering of redo log entries in the redo log buffer before they’re written to disk.

Using automatic shared memory management, the database automatically allocates memory for the following SGA pools: database buffer cache, shared pool, large pool, Java pool, and Streams pool. Youhave to specify only the total amount of memory required by setting the SGA_TARGET initialization parameter.

You canalso specify the minimum amount of memory for any of the SGA pools while usingautomatic SGA sizing using the following initialization parameters: DB_CACHE_SIZE, SHARED_POOL_SIZE, LARGE_POOL_SIZE, JAVA_POOL_SIZE, and STREAMS_POOL_SIZE. A few SGA pools, specified using such parameters as LOG_BUFFER, DB_KEEP_CACHE_SIZE, and DB_RECYCLE_CACHE_SIZE, are still manually sized.

The Databae Buffer Cache DB_CACHE_SIZE

If youdecide to disable SGA_TARGET by setting it to 0, youwill need to manuallyset initialization parameters for the memory pools (unless you want to use previoussizes). For the database buffer cache, you would assess the percentage of the databaseblocks requested by users read from the cache versus from the disk. Thispercentage is termed the hit ratio. If response times are too high and this ratio islower than 90% (as a rule of thumb), increasing the value of the initialization parameter DB_CACHE_SIZE can increase performance.

Not all operations read from the database buffer cache: large full-table scans are limited to a small number of buffers to avoid adversely impacting other users by dominating the cache. Parallel table scans completely bypass thebuffer cache.

The Shared Pool SHARED_POOL_SIZE

The shared pool is accessed to cache the SQL sent to the database and for the data dictionary information required to executethe SQL.

Because of its central role in database operations, a shared pool that is too small may have a greater impact on performance than a database buffer cache that is too small.

A shared pool that is too small will cause poor performance for a variety of reasons,

affecting all users. These reasons include the following:

• Not enough data dictionary information can be cached, resulting in frequent

disk access to query and update the data dictionary.

• Not enough SQL can be cached, leading to memory “churn,” or the flushing of

useful statements to make room for incoming statements. A well-designed application

issues the same statements repeatedly.

• Not enough stored procedures can be cached, leading to similar memory churn

and performance issues for the program logic stored and executed in the

database.

Shared pool sizes inthe 150–250 MB range are not uncomm on for large, active databases.

The redo log buffer LOG_BUFFER

While the redo log buffer consumes a very small amount of memory in the SGA relative to the database buffer cache and the shared pool, it’s critical for performance. The redo log buffer is flushed to the redologs on disk when a transaction is committed (normally) or when the redo log bufferis one-third full. In a busy database, transactions may generate enough redo to fill theremaining unfenced portion of the redo log buffer before the I/O to the disks for thefenced area of the redo log buffer is complete. If this happens, the transactions willhave to wait for the I/O to complete because there is no more space in the redo logbuffer. This situation can impact performance.

The statistic “redo buffer allocation retries” can be used to understand this situation.

SELECT name, value FROM V$SYSSTAT WHERE name = ‘redo buffer allocation retries’;

Ideally, the value of “redo bufferallocation retries” should be close to 0.

PGA

Each server has a Program Global Area (PGA), which is a private memory area thatcontains information about the work the server process is performing. There is onePGA for each server process.

The PGA consists of a working memory area (nie można ustawiać rozmiaruO for things such as temporary variables used by the server process, memory for information about the SQL the server process is executing, and memory for sorting rows as part of SQL execution.

Memory for SQL statements

When a server process executes a SQL statement for a user, the server process tracks the session-specific details about the SQL statement and the progress by executing it in a piece of memory in the PGA called a private SQL area, also known as a cursor. The private SQL area contains the session-specific information about the executionof the SQL statement within the session, such as the number of rows retrieved so far.

OLTP systems typically have a “working set” of SQL statements that each user submits. For example, a user who enters car rental reservations uses the same forms inthe application repeatedly. Performance will be improved if the user’s server processhas enough memory in the PGA to cache the SQL those forms issue.

Memory for sorting within the PGA

Each server process uses memory in its PGA for sorting rows before returning themto the user. If the memory allocated for sorting is insufficient to hold all the rows that need to be sorted, the server process sorts the rows in multiple passes called runs. The intermediate runs are written to the temporary tablespace of the user, whichreduces sort performance because it involves disk I/O. Sizing the sort area of the PGA was a critical tuning point in Oracle database releasesprior to Oracle Database 10g.

As of Oracle Database 10g, the database provides automatic sizing for the PGA. Bydefault, this memory management is enabled, and sizing for PGA work areas is basedon 20 percent of the SGA memory size.

TimesTen

Baza danych całkowicie w pamięci RAM. TimesTen is mostappropriate for a high-load OLTP environment that requires extremely high throughputand real-time responsiveness.

A TimesTen instance can be used as a cache for an Oracle database. You load a subset of Oracle tables into the TimesTen instance, and the Cache Connect to Oraclefeature keeps the data synchronized.

CPU

Poorly written SQL is the number one cause of performance problems.

Oracle Database 10g introduced the SQL Tuning Advisor, a tool that can notonly recognize poorly written SQL, but also create an optimizer plan to circumventthe problem and replace the standard optimization plan with the improvedplan.

If your application isn’t using bind variables, the database will have to parse every statement it receives.(203)

7 marca 2011

Oracle import, export.

Disable archive log mode – np. przed Importem

Shutdown edit pfile / spfile (spfile when instance is up of course) to say log_archive_start = false.

startup mount

alter database noarchivelog;

alter database open;

Remember that once you disable archive log mode you need to take a fresh backup once archive log mode is turned back on.

Object Already Exists

If a database object to be imported already exists in the database, an object creation error occurs. What happens next depends on the setting of the IGNORE parameter.

If IGNORE=n (the default), the error is reported, and Import continues with the next database object. The current database object is not replaced. For tables, this behavior means that rows contained in the export file are not imported.

If IGNORE=y, object creation errors are not reported. The database object is not replaced. If the object is a table, rows are imported into it. Note that only object creation errors are ignored; all other errors (such as operating system, database, and SQL errors) are reported and processing may stop.

Caution:

Specifying IGNORE=y can cause duplicate rows to be entered into a table unless one or more columns of the table are specified with the UNIQUE integrity constraint. This could occur, for example, if Import were run twice.

COMMIT

Default: n

Specifies whether Import should commit after each array insert. By default, Import commits only after loading each table, and Import performs a rollback when an error occurs, before continuing with the next object.

CONSTRAINTS

Default: y

Specifies whether or not table constraints are to be imported. The default is to import constraints. If you do not want constraints to be imported, you must set the parameter value to n.

Note that primary key constraints for index-organized tables (IOTs) and object tables are always imported.

Źródła:

http://download.oracle.com/docs/cd/B10500_01/server.920/a96652/ch02.htm

http://www.dba-oracle.com/bk_disable_archive_log_mode.htm

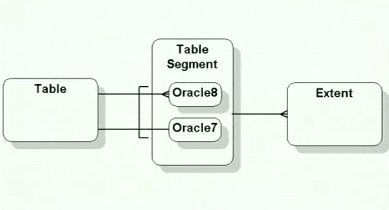

Oracle segments and extents. (F2)

data segments

rollback segments – store undo information (for rolling back database transactions, store data for read consistency)

index segments

temporaty segments – dynamicly created (sorting np. “order by”)

EXTENT – contiguous set of data blocks.

one to many relation (jeden segment może zawierać wiele extentow)

Oracle performance (K1)

Oracle began providing its own volume manager software for Linux and Windows in

Oracle9i Release 2. Since Oracle Database 10g, database releases for all supported

operating systems include a cluster file system and volume manager in the database

that is leveraged by ASM. When using ASM, it is recommended that you not try to

leverage an operating system volume manager.

Consider an Oracle database with an 8 KB data block size and the DB_FILE_

MULTIBLOCK_READ_COUNT initialization parameter set to 32. There will be two

sizes of I/O by Oracle: a single 8 KB data block and a 256 KB multiblock read (32 times

8 KB).

Two types of parallelism are possible within an Oracle database:

Block-range parallelism Driven by ranges of database blocks

ability to dynamically parallelize table scans and a variety of scan-based functions.Oracle7 implemented blockrange parallelism by dynamically breaking a table into pieces, each of which was a range of blocks, and then used multiple processes to work on these pieces in parallel.

Partition-based parallelism Driven by the number of partitions or subpartitions involved in the operation.

With partitioned tables, introduced in Oracle8, an operation may involve one, some, or all of the partitions of a partitioned table.

An Oracle instance has a pool of parallel execution (PE) processes that are available to the database users. Oracle8i introduced the notion of self-tuning adaptive parallelism. This feature automatically scales down parallelism as the system load increases and scales it back up as the load decreases.(193)

3 marca 2011

Oracle structures. (F2)

TABLESPACE -> SEGMENT -> EXTENTS -> DATA BLOCKS

PCTINCREASE – o jaki procent następny extent ma być większy od poprzedniego